5 product lessons from 2025 (in an AI world that stopped waiting for us)

Looking back, 2025 wasn’t the year AI quietly made product teams faster. It was the year it forced us to confront how we actually work.

AI moved from something we talked about to something embedded in everyday product decisions, and once that happened, a lot of comforting assumptions fell apart. Speed didn’t magically increase. Clarity didn’t arrive by default. In many cases, the exact opposite happened.

These are the five lessons that stood out most for me in 2025, shaped by scaling airfocus inside a much larger ecosystem, by building with AI rather than around it, and by relearning what focus really means when complexity is no longer optional.

1. AI didn’t replace PMs; it exposed them

At the start of the year, I assumed AI would simply make product managers faster through better drafts, quicker analysis, and cleaner execution.

What actually happened was more uncomfortable.

As we started embedding AI into our workflows at airfocus, it became obvious that most so-called “AI failures” had very little to do with the models themselves. They were failures of judgment and shared understanding. Ambiguous goals. Missing context. Poor documentation. Unclear decision logic.

AI didn’t smooth over those gaps. It amplified them.

The real shift wasn’t learning how to write better prompts. It was learning how to teach a system how our business thinks. That means being explicit about constraints, trade-offs, priorities, and intent. In practice, that shows up in how roadmaps are framed, how feedback is structured, and how decisions are explained, not just in what gets built.

We once thought the defining PM skill of the future would be prompt engineering. In reality, it is context engineering. You don’t need to write code, but you do need to make your reasoning legible to humans and machines alike.
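To make "context engineering" a little more concrete, here is a minimal sketch of what being explicit about intent, priorities, constraints, and trade-offs could look like before anything is handed to a model. The `DecisionContext` class, its field names, and the example values are all hypothetical illustrations, not how airfocus implements this.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class DecisionContext:
    """Hypothetical container for the reasoning a PM would otherwise leave implicit."""
    intent: str
    priorities: List[str]
    constraints: List[str]
    trade_offs: List[str]

    def missing(self) -> List[str]:
        """Return the names of empty fields, i.e. context a model would have to guess."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def render(self) -> str:
        """Render the context as plain text, refusing to do so while gaps remain."""
        gaps = self.missing()
        if gaps:
            raise ValueError(f"Context is incomplete: {', '.join(gaps)}")
        lines = [f"Intent: {self.intent}"]
        sections = (
            ("Priorities", self.priorities),
            ("Constraints", self.constraints),
            ("Trade-offs", self.trade_offs),
        )
        for label, items in sections:
            lines.append(f"{label}:")
            lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


# Illustrative values only.
draft = DecisionContext(
    intent="Reduce time to first roadmap for new workspaces",
    priorities=["Onboarding completion", "Fewer setup steps"],
    constraints=["No changes to the permissions model this quarter"],
    trade_offs=[],  # left empty on purpose: the gap is surfaced, not guessed
)
print(draft.missing())
```

The point of the design is that an unstated trade-off becomes a visible error instead of something the model silently fills in.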

2. Integration beats innovation

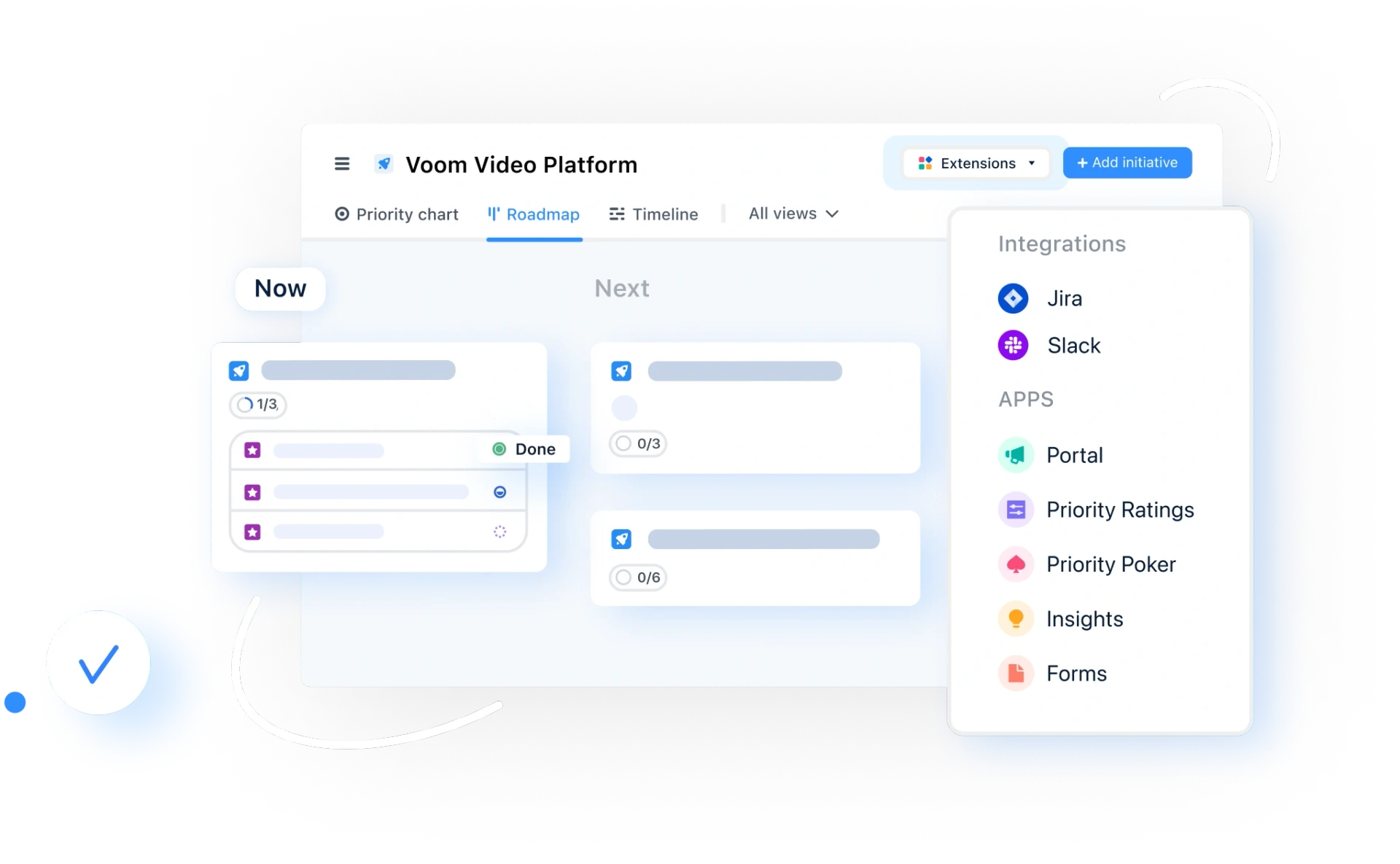

Building airfocus as part of Lucid’s broader product ecosystem fundamentally changed how product work feels.

When you’re operating independently, it’s easy to default to the question: What can we build next? Inside a larger system, the question becomes: How does this fit?

That shift sounds subtle, but it changes everything. Innovation stops being about volume of ideas and starts being defined by alignment and sequencing. Every new feature carries an integration cost, both technical and operational. And if you ignore that, velocity drops fast.

Some of the hardest product decisions we made this year were about not building things that looked valuable in isolation but would have added friction elsewhere. That discipline – knowing when not to build – is where many teams lose speed without realizing it.

In complex environments, progress comes less from novelty and more from coherence.

3. The 80/20 rule is your only real roadmap

2025 was a brutal reminder that complexity scales faster than clarity.

More customers, more use cases, more requests. It all adds up quickly. Several times in 2025, we found ourselves chasing completeness: filling gaps, covering edge cases, trying to satisfy every stakeholder concern at once.

Each time, the outcome was the same. The majority of impact still came from a small subset of work. The same 20 percent of features, insights, and customers drove around 80 percent of the value.
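A quick way to check whether this pattern holds for your own backlog is a cumulative-share calculation. The sketch below assumes you already have some value estimate per feature; the function name, the feature names, and the scores are hypothetical and chosen purely for illustration, not taken from airfocus data.

```python
def smallest_set_covering(values, target_share=0.8):
    """Return the highest-value items that together reach target_share of total value."""
    total = sum(values.values())
    if total <= 0:
        return []
    selected = []
    running = 0
    # Walk items from most to least valuable, stopping once the target is reached.
    for name, value in sorted(values.items(), key=lambda kv: kv[1], reverse=True):
        selected.append(name)
        running += value
        if running / total >= target_share:
            break
    return selected


# Hypothetical value scores for ten features (they sum to 100).
feature_value = {
    "roadmaps": 50,
    "prioritization": 30,
    "feedback_inbox": 8,
    "integrations": 5,
    "custom_fields": 3,
    "exports": 2,
    "themes": 1,
    "widgets": 1,
    "badges": 0,
    "stickers": 0,
}

core = smallest_set_covering(feature_value)
share_of_features = len(core) / len(feature_value)
print(core, share_of_features)
```

If the share of features needed to reach 80 percent of value comes out far above 20 percent, the value estimates are probably too flat to prioritize with, which is a useful finding in itself.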

Roadmaps tend to collapse under the weight of good intentions. What starts as prioritization slowly turns into accumulation. The discipline isn’t identifying what’s valuable; it’s protecting it from everything else.

A roadmap isn’t a catalogue of promises. It’s a decision-making tool. And when it stops forcing trade-offs, it stops doing its job.

4. Context is the new distribution

As AI agents increasingly act as the interface between users and products, discovery is changing.

We’re moving away from a world dominated by clicks, pages, and funnels towards one shaped by context windows. Instead of asking whether a product can be found, the more relevant question becomes whether it can be remembered by the agent making decisions on a user’s behalf.

That shift reframed how we think about everything from positioning to messaging and data. Distribution is now about more than visibility. It also means being meaningfully understood. If your product’s value isn’t clear in context, it effectively doesn’t exist.

For product teams, this means investing less in surface-level optimization and more in structured, durable signals: what you do, who you’re for, and why you matter, expressed in ways machines can reason about.
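As a rough illustration of what a "structured, durable signal" might look like, the sketch below captures the three questions (what you do, who you're for, why you matter) as explicit fields and serializes them to JSON. The `ProductSignal` class, its field names, and the example product are hypothetical; this is not an established schema or anything an agent is guaranteed to consume.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class ProductSignal:
    """Hypothetical, machine-readable summary of a product's positioning."""
    name: str
    what_it_does: str
    who_it_is_for: List[str]
    why_it_matters: str

    def to_json(self) -> str:
        # sort_keys keeps the output stable across runs, so it can be diffed and versioned.
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Illustrative values for a made-up product.
signal = ProductSignal(
    name="ExampleProduct",
    what_it_does="Helps product teams prioritize work and share roadmaps",
    who_it_is_for=["product managers", "product leaders"],
    why_it_matters="Keeps strategy, feedback, and roadmap decisions in one place",
)

payload = signal.to_json()
print(payload)
```

Keeping the answers as separate, named fields rather than one paragraph of marketing copy is the design choice that matters here: each claim can be read, compared, and updated independently.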

5. Strategy is a constraint, not a plan

The hardest part of scaling airfocus inside Lucid wasn’t deciding what to do. It was deciding what not to touch.

As organizations grow, strategy often gets mistaken for a plan – a sequence of initiatives to execute. In reality, strategy works best as a constraint. It defines the boundaries within which decisions get easier and faster.

Once we started treating strategy that way, something clicked. Our teams spent less time debating direction and more time executing with confidence. Saying no became less political and more principled.

Taken together, these lessons changed how I think about product work heading into 2026. AI didn’t simplify the job; it raised the bar.

Malte Scholz
