There has been a lot of hype around ChatGPT, followed by endless blog posts tailored to a variety of job roles, including product managers. Generative AI is already changing how many industries work and has the potential to automate certain tasks and save people time. But is it really all it’s cracked up to be? Will it really make life easier, or, as many fear, even replace us?

The short answer: No.

The somewhat longer answer: Well, still no.

You have nothing to fear when it comes to ChatGPT or other AI tools for product managers. They will certainly not replace product managers, copywriters, or even customer support agents. Stop hitting the panic button, we are all going to be just fine.

Let’s dig a bit deeper into the topic and see how to use it to your advantage!

What is ChatGPT?

ChatGPT is a chatbot built on GPT, a family of large language models developed by researchers at OpenAI, an American company whose stated aim is to “promote and develop friendly AI in a way that benefits all humanity.” It uses machine learning, specifically a large neural network trained on text, to process and generate language, and models like it can be built into other products, such as search engines.

When you enter a prompt, it generates human-like text in response. It can be used for a variety of tasks, such as language translation, summarization, and answering specific questions.

ChatGPT is part of a much larger category of new tools known as “generative AI.” These models start by being trained on a large dataset, which could include text, images, or numbers. Training teaches the model the patterns and relationships between different elements within that data.

Once the model is trained, it can generate new data based on the patterns it has learned. For example, a generative AI model trained on a dataset of images can generate new images that are similar in style and content to the training data (like DALL-E, also by OpenAI).

A language model generates text one word (more precisely, one token) at a time, sampling each word from a probability distribution over possible next words that it learned from its training data. Repeating this step produces text that is usually coherent and reads as meaningful.
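To make the idea of sampling from a learned distribution of words a bit more concrete, here is a deliberately tiny sketch in Python. It is a bigram model: it only looks at the previous word and counts which words followed it in a made-up, three-sentence corpus. ChatGPT uses a large neural network that takes far more context into account, so treat this as an illustration of the general idea, not of how ChatGPT actually works. The corpus and the names `next_counts`, `sample_next`, and `generate` are hypothetical, invented for this example.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy "training data"; real models train on vastly more text.
corpus = (
    "a roadmap communicates the vision . "
    "a roadmap communicates the direction . "
    "a roadmap shows the strategy ."
).split()

# "Training": count which word follows which. These counts are the patterns.
next_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    next_counts[current][following] += 1

def sample_next(word, rng):
    """Pick the next word in proportion to how often it followed `word`."""
    counts = next_counts[word]
    words = list(counts)
    weights = [counts[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

def generate(start, max_words, seed=0):
    """Generate words one at a time until a period, a dead end, or max_words."""
    rng = random.Random(seed)
    words = [start]
    while len(words) < max_words and words[-1] != "." and next_counts[words[-1]]:
        words.append(sample_next(words[-1], rng))
    return " ".join(words)

print(generate("a", max_words=10))
```

Because “the” was followed by “vision,” “direction,” and “strategy” in the training text, this model can produce a sentence such as “a roadmap shows the vision .” that never appeared in its data. That is the “new version of what it has been fed” idea in miniature, and a hint at why fluent output is not necessarily correct output.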

In short, ChatGPT takes existing data and spits out a new version of what it has been fed. Sounds pretty neat, right?

Generative AI has its limits

I too was entranced by the potential that ChatGPT and other generative AI tools could bring to the table, so I decided to give them a go. After playing with them for a few hours, I came away with two serious concerns: quality of content and critical thinking.

Quality of Content

I asked ChatGPT to write me a paragraph on what a product roadmap is and how to use one as a product manager, and it gave me the following:

“A product roadmap is a high-level visual summary of the product strategy and plans. It is a tool used by product managers to communicate the vision and direction of the product.”

So far, so good. It then added: “A product roadmap provides a clear picture of the product's evolution, the major milestones and features that will be delivered, and the timeline for their delivery.”

That is when I asked it to stop.

Now, if you’re a product manager, you hopefully know where this went wrong. The first part of the answer makes sense: a roadmap is in fact a high-level summary that can be used to communicate the vision and direction of the product. What we all know it isn’t: a timeline of features that communicates delivery dates.

Disclaimer alert: I tried a few other tools, including Rytr.ai and Copy.ai, with similarly disappointing results.

What ChatGPT did was take what it knows and spit out a new version of it. Unfortunately, part of what it knows is useless copy-paste content that has been repeated so many times it has stopped making sense, yet to many it still sounds like the truth.

While a lot of buzz has been created around using generative AI to write blog posts and copy to speed up content creation, a human touch is clearly still needed. Unfortunately, most highly ranked SEO content is mediocre at best, and that is exactly what the tool draws on to generate new content for us. (I’m looking at all of you trying to make “kanban roadmaps” a thing, tsk tsk.)

To quote Ian Bogost, generative AI is, in fact, “dumber than you think.”

Critical thinking

I won’t deny that it is still a pretty cool tool, but that’s all it is: a tool. At best, it can help us get started with writing when we’ve hit a creative wall. At worst, we risk overrelying on a tool that lacks empathy and critical thinking.

While AI can generate pretty useful outputs, it lacks the human element that is essential - particularly in the product world. Product managers bring unique perspectives and experiences to the table, and they can make informed decisions based on factors that the AI is not aware of. These skills cannot be replicated or replaced, and they are crucial in ensuring that products meet customer needs and are successful in the market.

Above all, product managers are storytellers and communicators. If we start relying on tools to do the communicating for us, we give up a key skill, especially in a role where conversations happen in different contexts, with different people, and in different settings.

“But it’s just writing, not speaking!”

Both are critical skills you need to develop. Effective writing is a key component of effective communication, whether in emails, reports, or presentations, all of which you will be doing as a product manager. If you’re able to express yourself well in writing, you will inevitably develop your speaking skills at the same time.

How generative AI can be helpful

All that said, generative AI can be helpful for automating tasks and analyzing data, work that can often eat up hours you could otherwise spend on other things.

Here are a few ways you can make the most out of it:

Customer feedback analysis

Generative AI can be used to analyze large amounts of customer data, including surveys, interviews, feedback, and even behavior over time. You can then use the results to surface a wide range of insights, inform product decisions, and, of course, save time. This by no means replaces speaking to customers (please don’t think you can do this!), but it can cut down the time you would otherwise spend trying to understand and sort through the data.

Research

AI can help uncover answers to questions that might otherwise take a really long time to figure out. Google Search is great, but don’t you sometimes wish you could just get an answer rather than sorting through three pages of results? This by no means replaces researchers or the need to run your own interviews, but it may shorten the time it takes to get there, for example when you want to understand a market better or find supporting evidence.

Predictive modeling

AI can be used to predict future performance across a range of metrics, including product adoption. For example, you can use predictive modeling to identify patterns in behavior, spot changes in user trends, and raise a flag when there is a risk of churn.

Customer support

I am personally not a fan of replacing people with machines, nor am I a fan of putting customers through a chatbot that only adds to their existing frustration. I still believe product managers, like support agents, need to develop their communication and empathy skills. That said, there are some really cool things you can do with generative AI to improve responses, like rephrasing sentences and finding the right tone of voice, especially when dealing with a large number of requests (hey, I get it, I worked in customer support too!).

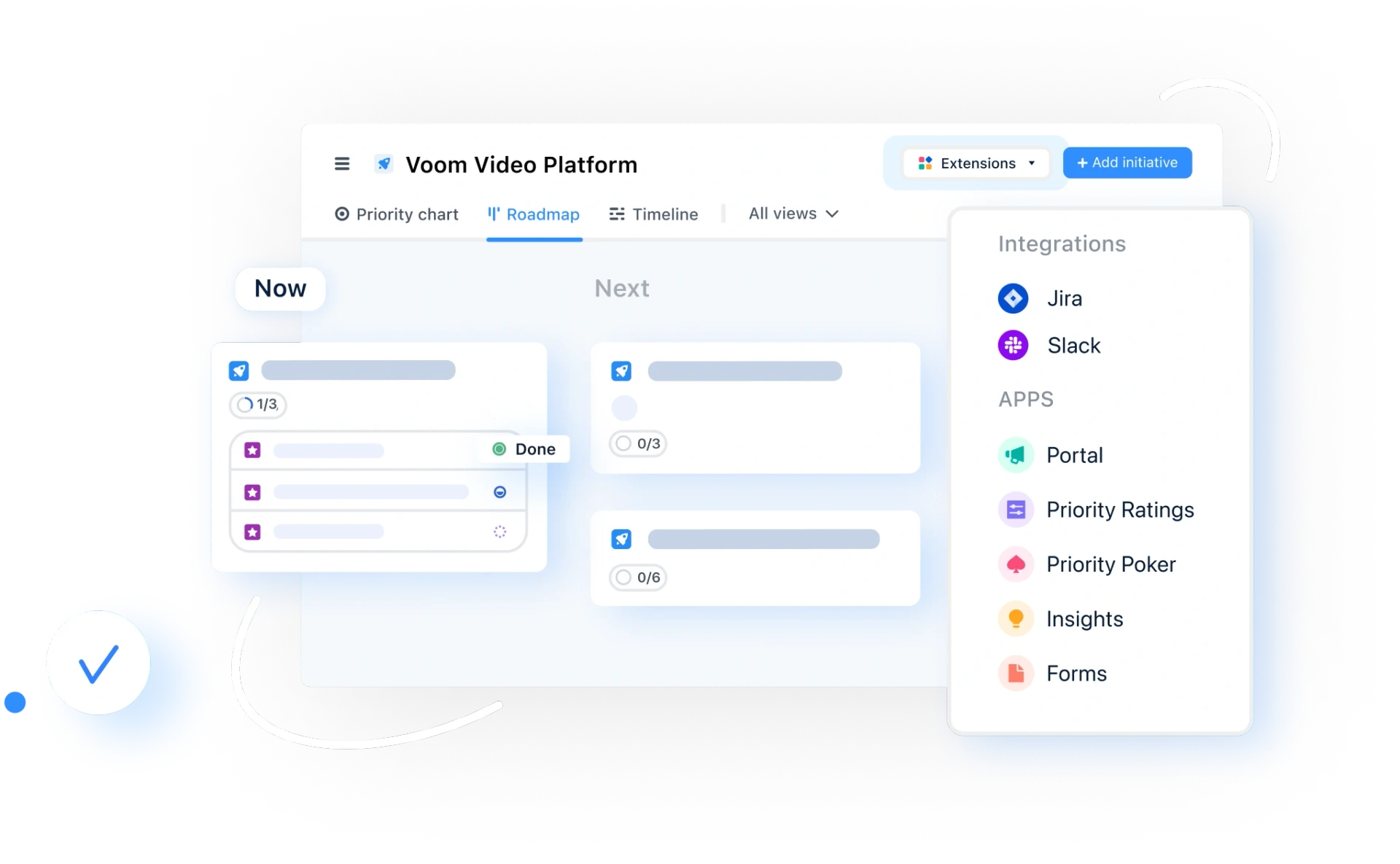

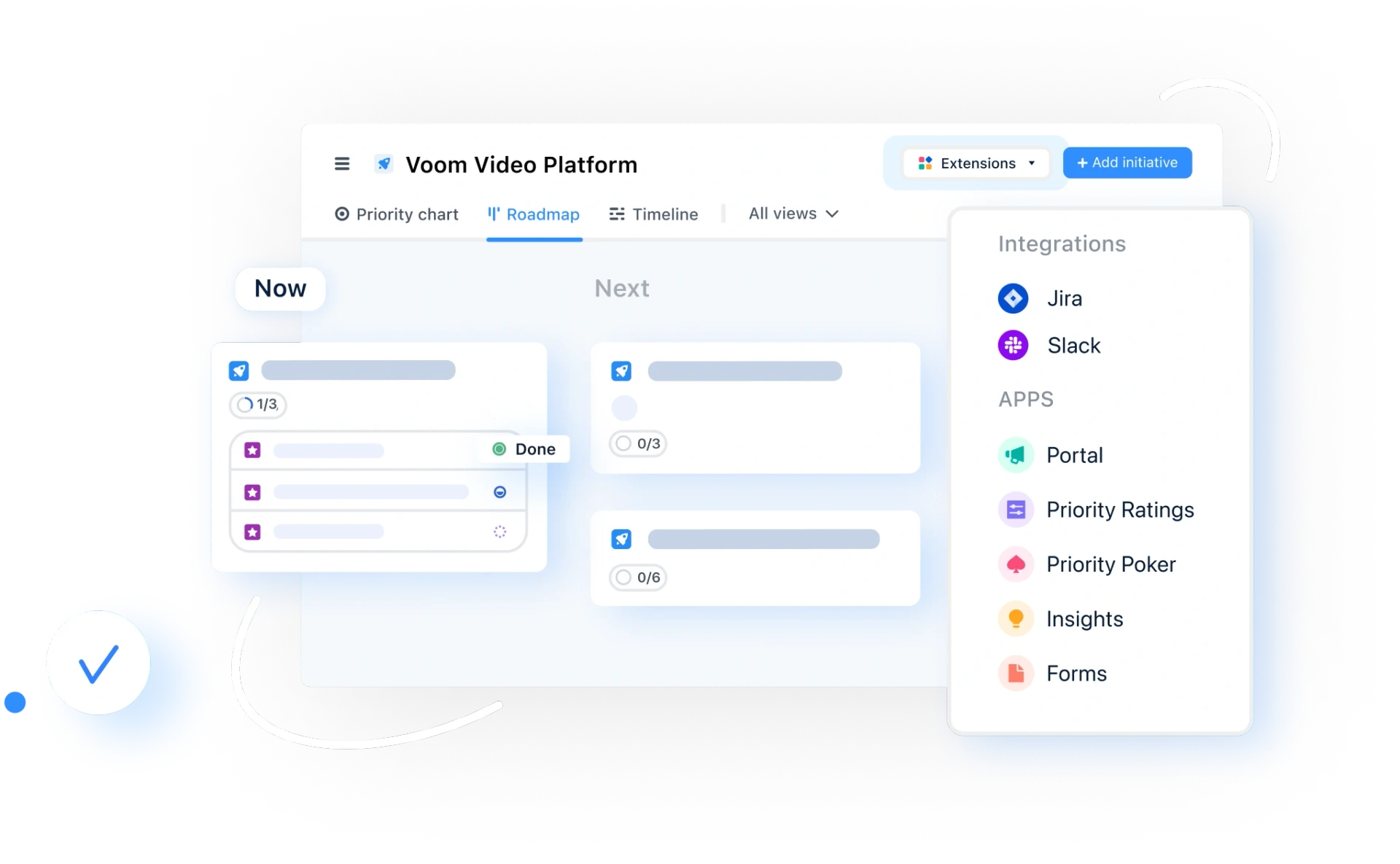

You can try out the new AI Assist from airfocus, available with a simple slash command, to explore all the ways AI can help product managers.


Generative AI: Proceed with caution

Overall, I think generative AI can be a pretty useful tool, but we still must proceed with caution. Not only do we risk neglecting the practice of key human skills, but there is also the broader ethical concern of using a tool that learns from other people’s work. And because it depends on human input, it carries an inherent risk of picking up the biases in that input.

“Some suggest that if AI reaches sentience, it would provide an entirely new perspective on humankind. Whereas so far our proclaimed mirrors are devised by human hands, we might claim that a sentient AI would have its own way of thinking that differs from humanity. In that case, a sentient AI could possibly discern aspects about us that we are either unable to already detect or that land on some other dimension that we’ve never conceived of. A counterargument is that if this sentient AI arose at the hands of humanity, it would seemingly not be able to go beyond what humankind has established in it. The counter to the counter-argument is that AI might find a means to branch further out. You can keep walking that path until the cows come home.” (Lance Eliot)

Welcome home, Skynet.

Andrea Saez
