29 Mar 2023

The Responsibility of AI: Who is Accountable for the Results of Predictive Models?

Over the past months, we have witnessed a mass adoption of AI-based tools that generate images, hold conversations, and more. We know, however, that businesses have long been using this type of technology to improve their data models, process copious amounts of data, develop their products, and grow in market value.

The potential of AI (and all its subfields) is immense: it saves you time, it pushes your thinking further and faster than ever, and, because it can process and learn from data so quickly, it lets you perform the most incredible tasks you can fathom.

It also has the potential to be harmful in ways we haven’t yet considered, precisely because, fortunately, we aren’t (all) deliberately malicious people looking to harm others.

The big question we all have been thinking about is: who should be accountable when predictions turn out to be harmful?

First things first - let’s set the scene

Predictive modeling

Predictive modeling (or predictive analytics) is a method/process; machine learning (a subset of AI) is a technology/tool. The goal of predictive modeling is to create models, based on historical data and statistics, to predict the future.

Predictive models become very powerful when supported by machine learning (ML), as ML “learns” from the data it is fed. This allows for far more advanced and faster data processing, which can be very useful in cases like identifying fraudulent transactions. Other examples where predictive models are applied include knowing when you’ll need to increase stock due to weather and lifestyle changes, estimating what resources a hospital will need based on population growth, and predicting an individual’s credit rating.
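To make “using historical data to predict the future” concrete, here is a minimal sketch of a predictive model in its simplest form: a straight line fitted by ordinary least squares to past observations and then used to forecast a new value. The temperature and sales figures, and the helper names `fit_line` and `predict`, are hypothetical and chosen for illustration only; real predictive models, ML-based or not, are far richer.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept to historical data by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the slope.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the fitted line to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical history: daily maximum temperature (°C) vs. units of a cold drink sold.
temps = [18, 21, 24, 27, 30]
units = [120, 150, 180, 210, 240]

model = fit_line(temps, units)
forecast = predict(model, 33)  # forecast demand for a 33 °C day
print(f"Forecast for 33 °C: {forecast:.0f} units")
```

Note that 33 °C lies outside the range of the historical data, so this forecast is an extrapolation: the model simply assumes the past pattern continues, which is exactly where a prediction deserves the most scrutiny.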

Responsibility and accountability

While responsibility is a pretty international word, you’d struggle to find a good equivalent for accountability (at least across the Latin-based languages). A person who’s responsible is tasked with getting the work done; a person who’s accountable will own the consequences of that job. To put it in product terms, responsibility is related to output, accountability covers the outcome.

This distinction matters because it helps us identify the best people to bring into the conversation when we are troubleshooting unintended results.

It is a data problem

At heart, this is a data problem. When we have data problems, we debug them by breaking down the data pipeline: input, transformation, and output.

Data input

A few years back everyone seemed to be looking for a data scientist. I remember having conversations with my data scientist friends about how most of these companies thought they needed a data scientist, even when there was simply not enough data to work with.

For most of these models and technologies to work, you need A LOT of data.

The amount of data needed should get you wondering:

Where is all this data coming from?

How’s it being generated/harvested?

To get enough data, how far back do you need to go? And how wide?

Is all this data relevant to answer the question we’re after?

How’s it possible to “control” this data?

If this isn’t currently a transparent conversation within your team or organization, there has never been a better time to start. If nothing else, tech companies have come under a lot of scrutiny recently, and public trust has been in decline.

For example, if you’re working for an organization that serves a particular segment of the population (as a lending or insurance product does), using a data set that only reflects a single customer archetype harms your customers and will damage your business. It is like trying to predict the weather in the southern hemisphere using data patterns from the northern hemisphere: it makes no sense.
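One simple way to make this kind of mismatch visible is to compare who is in your data with who you actually serve. The sketch below flags groups whose share of the training data falls well below their share of the target population. The age bands, the population shares, the `representation_gaps` helper, and the `tolerance=0.5` threshold are all hypothetical assumptions; a real check would use your own segments and a threshold agreed with your team.

```python
from collections import Counter


def representation_gaps(training_groups, population_shares, tolerance=0.5):
    """Return groups whose share of the training data is below
    tolerance * their share of the population the product serves.

    Maps each flagged group to (observed share, expected share).
    """
    counts = Counter(training_groups)
    total = sum(counts.values())
    gaps = {}
    for group, expected in population_shares.items():
        observed = counts.get(group, 0) / total
        if observed < tolerance * expected:
            gaps[group] = (observed, expected)
    return gaps


# Hypothetical lending data set: the age band of each historical applicant.
training = ["25-44"] * 80 + ["45-64"] * 18 + ["65+"] * 2
# Hypothetical shares of each age band among the customers you want to serve.
population = {"18-24": 0.12, "25-44": 0.40, "45-64": 0.30, "65+": 0.18}

gaps = representation_gaps(training, population)
for group, (observed, expected) in sorted(gaps.items()):
    print(f"{group}: {observed:.0%} of training data vs {expected:.0%} of customers")
```

Here the check flags the youngest band, which is absent from the data altogether, and the oldest band, which is badly under-represented. A model trained on this data set would effectively be predicting the southern hemisphere’s weather from northern data.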

This kind of unsystematic approach to the data that feeds into your company is where the problem starts.

Remember, “garbage in, garbage out”.

Data transformation

Data transformation covers everything that happens between “raw data” and “final results”.

Preparing the data is also transforming it. Once you move data from one context to another, clean it, and merge it with other data sources, you are already creating a whole new data set with a different context, even before you apply any kind of modeling.
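A small illustration of how a routine preparation step can quietly change the data’s context: an inner join between two sources keeps only the records that appear in both. The customer records, regions, credit scores, and helper names below are made up; the assumption is simply that the second source covers some groups less well than others. Logging group counts before and after each step is one cheap way to catch the shift.

```python
from collections import Counter

# Hypothetical raw records: (customer_id, region).
customers = [(1, "urban"), (2, "urban"), (3, "urban"),
             (4, "rural"), (5, "rural"), (6, "rural")]

# Hypothetical second source: credit history keyed by customer_id.
# Rural customers are sparsely covered, which is the assumption being illustrated.
credit_history = {1: 710, 2: 650, 3: 690, 4: 600}


def inner_join(rows, lookup):
    """Keep only rows whose id appears in lookup, like an SQL inner join."""
    return [(cid, region, lookup[cid]) for cid, region in rows if cid in lookup]


def group_counts(rows):
    """Count rows per region (the second field of each row)."""
    return Counter(row[1] for row in rows)


joined = inner_join(customers, credit_history)
before = group_counts(customers)
after = group_counts(joined)

for region in before:
    print(f"{region}: {before[region]} -> {after.get(region, 0)} rows after join")
```

Nothing in the join is technically wrong, yet the merged data set now describes a mostly urban customer base. Any model built on it inherits that new context.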

Then, the processes and models used to analyze the data should fit the context of the problem at hand. Being clear about which models could be used, and understanding the potential impact each of them can create, is also a good conversation to have.

Remember, “all models are wrong, some are useful.” - George Box (1976)

The assumptions these models are based on should be questioned too.

Just as you shouldn’t take a single view of one customer and map it across all your customers, the people making the assumptions shouldn’t all share a single view of the population.

Recently, the collaborative journalism organization Lighthouse Reports uncovered a story about a “black-box” fraud assessment tool and how many of its predictions were actually harming the most vulnerable in society.

Testing as you develop is essential. Standard scripted testing won’t be enough, though. As you develop, the results should also be tested proactively, with a human and empathetic approach.

This is where many teams can benefit from D&I (diversity and inclusion) resources. Getting the team to align on inclusive principles is a great first step toward making sure the data is tested with the best intentions in mind.

Data output

“If you torture data for long enough, it will confess to anything” - Ronald H. Coase

The point of this quote is that information shouldn’t be taken at face value. As we have seen, everything from the context in which data is collected to how it’s used has an impact on the output.

How, where and to whom the results are presented should also be part of the equation when we speak about “data output”.

Sometimes the scale at which results are distributed makes careful framing and “control” difficult, which is exactly why caveats (confidence levels, breadth of research, etc.) and context need to be set.
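As a sketch of what setting such a caveat can look like, the function below reports a model’s measured accuracy together with a 95% Wilson score interval, so the audience sees a range rather than a single, overly confident number. The 82-out-of-100 backtest figure and the `wilson_interval` helper are hypothetical, and the Wilson interval is one common choice for a proportion among several.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion; z=1.96 gives ~95% confidence."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return centre - half_width, centre + half_width


# Hypothetical backtest: the model was right on 82 of 100 held-out cases.
correct, total = 82, 100
low, high = wilson_interval(correct, total)
print(f"Accuracy {correct / total:.1%} "
      f"(95% CI {low:.1%} to {high:.1%}, n={total})")
```

Running the same function on 8 correct out of 10 cases gives a much wider interval, even though the headline accuracy is similar. That difference is the kind of context a single number hides.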

It is everyone’s responsibility to question how the data they receive has reached them. While that might feel overwhelming, we have witnessed, on a global scale, how information can be misrepresented by sources we once deemed reliable.

Even within a business, data such as sales predictions can have a global impact in larger corporations.

From the materials that need to be sourced, shipped, and sold, all the way to revenue predictions tied to stock valuation and market impact: quite the headache, right?

We tend to treat sales prediction as a banal exercise, but in reality it can have damaging ramifications. In the first instance, risky predictions can determine the success or the end of a whole business. Then there are all the satellite and dependent businesses to consider and, ultimately, our planet.

So, who’s responsible and/or accountable?

A great part of a product person’s job is to break problems down. Breaking the data problem down into its different stages and workflows allows for a better assessment of who should be accountable for what when it comes to AI and predictive modeling.

That said, let’s offer a simple answer to a complex problem: everyone. Everyone is responsible and/or accountable, so we should all take an active stance within the remit and capacity of our own responsibility and accountability.

As builders/producers

As product people, we facilitate a lot of this work, and our habit of continuously asking “why” is immensely valuable here.

We understand the opportunity that data output presents in itself: a deeper understanding of the product (how it works and in which context), the people (how it will be presented and who will be impacted the most), and the process (how the data was identified, collected, and processed).

Looking at the data steps mentioned above, what would you like to change in your current process? How can you influence it towards a more positive and transparent place?

As platforms

Wherever there’s a two-sided market, there is room for an intermediary that neither produces nor consumes the information. The places where data is hosted and presented also carry their fair share of responsibility.

The lack of accountability shown by many of the larger social media platforms, which use our data to predict what we will want to see next, has allowed a lot of poor behavior to go unchecked and a lot of people to be harmed.

While AI and predictive modeling can be incredibly powerful for understanding user behavior for marketing and e-commerce, the same technology is used to decide which content to show users. This daily perpetuation of confirmation bias, at such a large scale, is associated with larger societal issues like the infodemic we witnessed during the COVID-19 pandemic.

Incentives/Business models

Yes, there will always be market forces, but incentives and business models have been created and supported by all of us.

We will always want to hit our success metrics, as that’s how we are compensated and recognized for our efforts. The problem arises when the incentives themselves give rise to bad actors and behaviors.

A very interesting article by the Wire collects the perspectives of Tim Berners-Lee, Jimmy Wales, and Wendy Hall on how to save the internet.

One of the most interesting points discussed is the internet’s business model. For the internet to be free, we adopted the advertising business model, where views and clicks pay the bills. As a result, you are bombarded with catastrophizing, clickbait articles designed to keep you viewing, clicking, and handing over your data (insert mind-blowing gif here).

As consumers

The consumers here are all the people who are on the receiving end of the output.

This ranges from the CEO and the commercial teams who ask for the results of a predictive modeling exercise, to the people directly affected by these predictions, to you, the reader, who has been collecting an immense amount of information about “when tech goes bad”.

It is our responsibility to question these outputs, to understand them better, to raise awareness when things don’t feel right, and even to bring these issues to policymakers, who are too slow to react to technological advances.

As policymakers

Governmental and legislative bodies can no longer ignore the potentially nefarious impact of some of these technologies. These agencies seem to be the only thing preventing things from getting a lot worse, but they are still quite slow. Agile coach, anyone?

Accountability challenges in AI-powered predictive modeling

There are many challenges here once you dig into the details.

You might want to use a different data source, but it may not be viable because of its size, for example when it covers only a small portion of the population, or because the model has already been trained on a heavily biased data source.

We hear of many instances of gender bias in data, from (apparently) harmless experiments to larger, population-wide systemic issues.

There has also been an ongoing debate around technology’s neutrality.

While this can become a complex question to answer, a good level of accountability needs to be defined across the board: from the data selected, to the models used, to the context in which the information is presented.

Gaining control

This is a complex and wicked problem. No 1,500-word article will crack it, and no single book, person, or organization should define what is true for a whole planet that relies on technology. This needs to be a conversation grounded in human rights and in how we approach these nascent technologies.

What we can do is raise awareness that this is not simple, that there should be accountability tracks for when things go “south”, and that frameworks, legislation, and systems should be in place to curtail some of the negative impacts that might stem from these predictions.

One tool worth trying is the Black Mirror test by Roisi Proven (and, as it turns out, some of the most twisted predictions have actually come true!). The Black Mirror exercise asks you to think about the most horrible consequences your product or data might cause. Those horrible thoughts become your “true south”: the things you will definitely want to consciously avoid or prevent from happening. It is a useful tool to return to whenever you plan a new data output and how you’ll share it.

While researching this piece, I came across an interesting perspective: a rising new role in tech, the tech ethicist. A few organizations, like All Tech is Human, have been raising awareness of the topic and have a whole platform dedicated to supporting this movement.

We have also talked a lot about discussing your data principles and policies transparently across the organization. If you are worried about the influence of “the loudest voice” in the room, consider trying something like the Delphi method. The idea is to get a panel of experts to agree on the best way forward through a series of questionnaires, presenting the group’s answers back to the panel each round until you reach a consensus.
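For teams that want to see the mechanics, the sketch below simulates Delphi-style rounds on a single numeric question, for instance what share of predictions should go to human review. Each round, the facilitator feeds back the anonymized group median, every expert revises privately, and the process stops once the interquartile range is narrow enough, which is one common consensus criterion. The estimates, the `delphi` helper, the `max_iqr` threshold, and especially the `revise` rule (each expert moving halfway toward the median) are hypothetical stand-ins: in a real Delphi study, experts revise by their own judgment and usually share written reasoning as well.

```python
from statistics import median, quantiles


def delphi(estimates, revise, max_rounds=5, max_iqr=1.0):
    """Simulate Delphi rounds on numeric estimates.

    Each round: measure spread, stop if the interquartile range (IQR) is
    at most max_iqr, otherwise feed back the anonymized median and let
    every expert revise privately via `revise(own_estimate, median)`.
    Returns the final estimates and a (round, median, IQR) history.
    """
    history = []
    for round_no in range(1, max_rounds + 1):
        q1, _, q3 = quantiles(estimates, n=4)
        group_median = median(estimates)
        history.append((round_no, group_median, q3 - q1))
        if q3 - q1 <= max_iqr:
            break
        estimates = [revise(e, group_median) for e in estimates]
    return estimates, history


# Hypothetical first-round answers (% of predictions needing human review).
first_round = [2, 5, 6, 8, 15]


# Hypothetical revision rule: each expert moves halfway toward the median.
def halfway(own, group_median):
    return own + 0.5 * (group_median - own)


final, history = delphi(first_round, halfway)
for round_no, group_median, iqr in history:
    print(f"Round {round_no}: median {group_median}, IQR {iqr}")
```

Because answers are gathered independently and fed back anonymously, no single voice can anchor the group. The simulation only shows the loop structure, not how real experts would behave.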

Like anything else, there are pros and cons to this approach, but consider this as a first step within a wider program of change.

Ultimately, as Maria Giudice puts it in her testimony for Technology is not neutral: “sometimes you don’t know something is wrong until it’s too late”.

If we are all accountable for the impact and its ramifications, we will actively look to fix it in the fastest, most collaborative, and human-focused way possible.

How will you show accountability for your AI-powered predictive models?

Inês Liberato

Read also

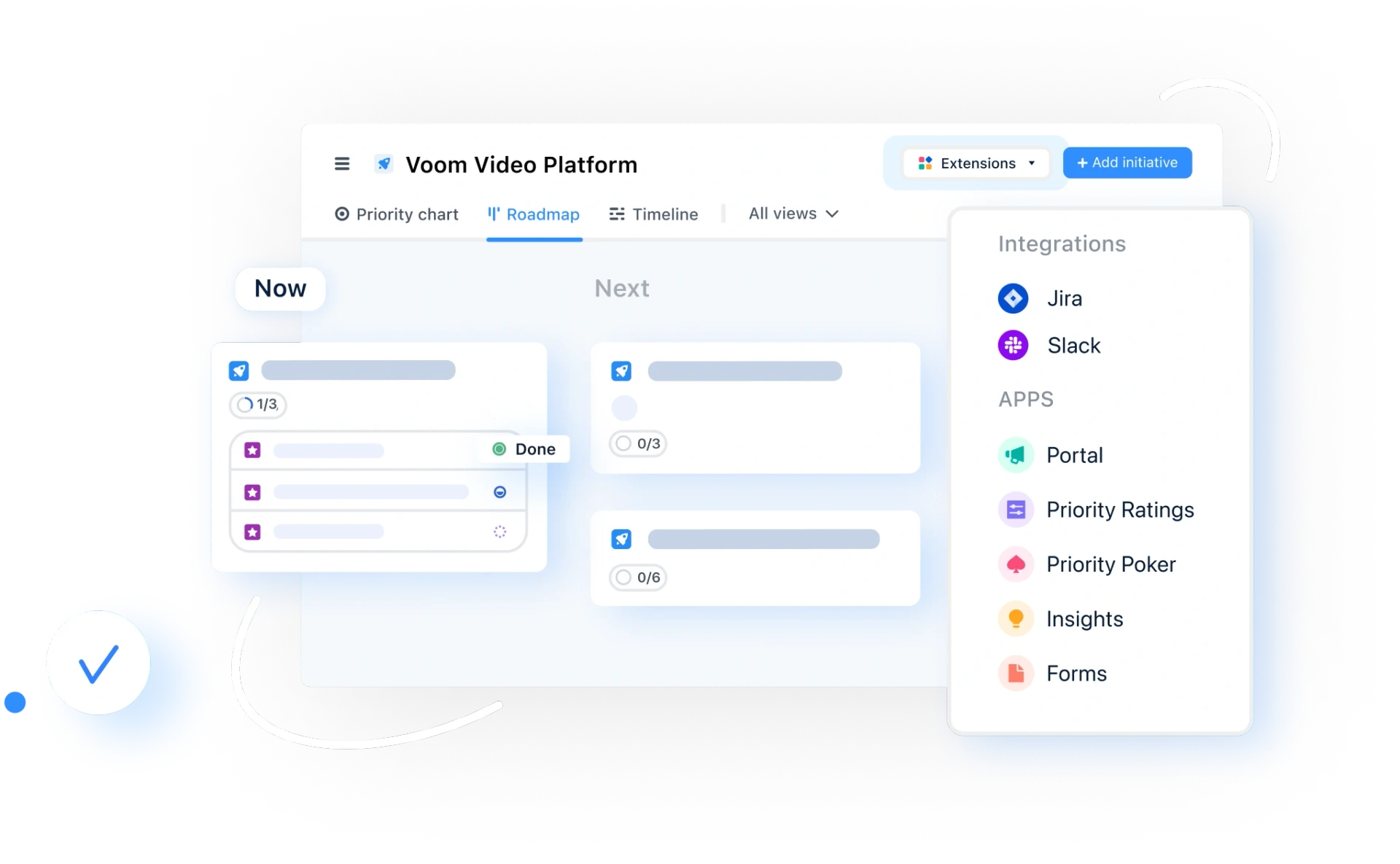

Experience the new way of doing product management