AI hype to AI-ready: A CPO's guide to AI readiness, strategy, and execution

According to Lucid’s AI readiness report, 61% of knowledge workers believe their organization’s AI strategy isn’t aligned with operational capabilities.

AI has become the loudest story in product, but for most product leaders, the real question is far more basic: where do we even start, and how do we avoid wasting effort on noise rather than impact?

The Lucid report suggests that the real opportunity may be in AI readiness.

Our Chief Product Officer, Dan Lawyer, is a key figure in the company’s AI integration and brings 20 years of product leadership experience from companies including Adobe, Ancestry, and Vivint. He sums up the challenge of AI transformation simply: “AI can’t be a side quest. It has to be part of your broader product and business narrative, or it will just create expensive distractions.”

We sat down with him to discuss how companies should approach AI, from readiness and roadmap integration to successful execution.

Here are his nine pieces of advice.

1. Build intuition using AI tools

AI readiness starts long before your first “AI feature” hits the roadmap. “Every PM should be deep into their own personal experimentation with AI,” says Dan, “as they would with any new technology, within the guardrails of your company’s policies, of course.” Personal use builds intuition, not just clever prompts, so PMs can tell the difference between a shiny demo and a durable opportunity.

2. Start with your real business problems, not "we need AI"

Dan frames AI’s role in product strategy through three lenses: consuming AI, producing AI, and enabling AI.

“As consumers of AI, your job is to measurably speed up your ability to deliver customer and business value,” he says. For producers, the question is even sharper: “Are you actually speeding up your customers and creating new value, or just shipping AI-flavored features?”

The enabler role is both internal and external. “[Internally] you have to invest in infrastructure, systems, and workflows that allow many teams to work on AI features at the same time without stepping on each other. [Externally] you should be making it easy for your customer to adopt your AI, or to build their own AI-backed solutions using your product.”

On roadmap planning, Dan returns to first principles. “AI is really just a technology – a disruptive one, yes – but still a technology,” he explains. “You’ll get the best outcomes if you start by identifying your business objectives and then assessing what technologies are best to drive them, instead of starting from ‘we need AI’ and hunting for a problem.”

3. Identify the right opportunities

At the organization level, the next step is to move beyond scattered wins and “random acts of success.” How?

Start with what you have

Before overcomplicating AI adoption, Dan advises teams to start with the basics: “Many common tools that product managers already use have AI-backed features built in. Make sure your organization has authorized their use and that you know what data you’re allowed to leverage in these features.”

Choose where to place your bets

From there, the question becomes which parts of the product workflow you want to accelerate, and how you’ll prove it worked. “Agree on what you’re trying to speed up and how you’ll measure it, then establish a baseline before you touch anything,” he says.

Know your domain inside out

Any attempt to automate a workflow, Dan notes, requires a crisp understanding of your current workflows. “A lot of the real process is not documented; it lives in people’s heads,” he explains. That is why readiness means documenting the current state, redesigning workflows around what AI can actually do, and only then layering in automation and measurement.

4. Build the unsexy foundations: data, policy, and product ops

Most AI conversations jump too soon to models and features. For Dan, however, it all starts with something less exciting but extremely important: governance and data. Teams need to know and comply with company policies and external regulations, pay particular attention to PII and customer data, and make their data sets usable by AI. “If your data is a mess, AI will just surface the mess faster,” he says. Clean data, clear policies, and well-understood boundaries are the real foundation.

This is why product ops – or at least product-ops-like thinking – matters. “You don’t necessarily need someone with a ‘product ops’ title,” Dan says, “but you do need people in the product team who care about improving the product function itself.” This entails documenting workflows, codifying best practices, and turning one-off prompt hacks into reusable playbooks. Otherwise, “every PM ends up with their own special prompts, and that’s not scalable,” he adds.

Product managers risk being left behind if they don’t invest in this. “Right now, software engineers are getting the biggest benefits from AI in terms of speed, and UX teams are second in prototyping,” Dan observes. “PMs are often last in line, trying to keep up with everyone else’s new velocity.” That is a warning sign: if product doesn’t build its own AI literacy and processes, it stops being the function that sets the pace.

5. Don’t underestimate the importance of a good rollout

When companies first move toward AI adoption, Dan sees the same assumptions time and again: “There’s this belief that if we turn it on, people will use it; if people use it, we’ll automatically be more productive, and that we can just ‘automate stuff’ without investing in understanding how things actually work,” he says. When these assumptions run into reality, they create frustration and disappointment.

The CPO points to a growing list of war stories about the last mile of AI automation. “The non-deterministic nature of AI requires a lot of grounding context to get good outcomes,” he explains. That context, however, usually lives in tribal knowledge, edge cases, and unwritten rules. “You have to pull that knowledge out of people’s heads and refine it before AI can use it effectively,” he says. Skipping that work leads to impressive demos but underwhelming rollouts.

6. Identify areas with repetitive work

AI opens endless paths for innovation. But once reality sets in, ideas must be weighed against business, resource, and budget constraints.

Start with the areas of the business where efficiency is low and manual effort is high. “Look at parts of the business with a lot of repetitive work: support, internal operations, complex manual workflows,” Dan suggests. Well-known use cases like AI chatbots in customer support can be a good starting point, and he expects to see “an increased number of vertical, domain-specific AI use cases that are easier to adopt and give you initial value.”

7. Don’t treat AI as a sure bet

“Finance leaders are willing to invest in AI because they don’t want to be left behind,” says Dan, “but that doesn’t mean every AI bet is worth placing.”

Dan suggests weighing each bet with two questions:

“How confident are we that this will play out and deliver a return on investment?”

“How impactful will it be if it does?”

The best bets sit at the intersection of high confidence and high impact.

8. Remember this isn’t a one-and-done AI strategy

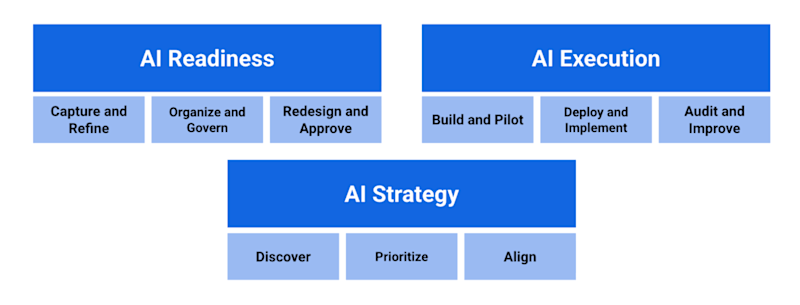

Inside Lucid, AI transformation is viewed as a three-stage loop. “We tend to think of AI transformation as strategy first, then readiness, then execution,” Dan explains. However, he warns, “strategy isn’t a one-time phase. It keeps recurring as you learn. And readiness is the phase people are most tempted to skip.”

9. Create clear definitions of success

To move into execution responsibly, you need a clear definition of success. “If you can’t measure it, you won’t succeed,” Dan says. “Pick the metric that best represents a successful outcome, instrument it, benchmark it, measure it, optimize it.”

Measuring AI’s impact relies on familiar tools, with a few twists. “The fundamentals don’t change,” Dan says. “We still care about classic product metrics: return usage, influence on business metrics like signups, renewals, virality, and we still need strong qualitative feedback loops and usability testing.”

The twist is that better AI can mean less visible usage. “Some AI applications will improve user value so much that they actually reduce the time and frequency users spend in your product,” he explains. In those cases, you need to lean more heavily on value and outcome metrics – task completion, customer satisfaction, revenue, and retention impact – rather than raw session counts.

At the same time, Dan emphasizes the importance of learning time: “You have to carve out time and provide guidance and tooling so people can play and learn.”

As AI initiatives scale, new problems appear: conflicting patterns, inconsistent UX, governance gaps, poor data. “Your job as a product leader is to find the bottlenecks and friction points that prevent a scaled approach and resolve them,” he notes. That is what turns AI from a handful of experiments into a repeatable capability.

The evolving AI conversation

Looking ahead, Dan sees a gap still dominating the AI conversation. “There’s a gap between the promise of AI, the actual capability of AI, and the value companies are able to extract,” he says. The near future is about closing the gap between capability and value, not just pushing what’s technically possible. “AI is awesome – until it’s not,” he adds. “It’s awesome until it makes a decision that completely ignores your business context.”

Closing that gap comes back to context and knowledge. “You need to ground AI with more context and knowledge about your business,” Dan argues. “The catch is that most of that knowledge is only in people’s heads.” The work for product leaders is to extract, structure, and maintain that knowledge so AI can use it reliably.

Francisca Berger Cabral
